Introduction

Global Ice Velocities

The Inter-mission Time Series of Land Ice Velocity and Elevation (ITS_LIVE) project facilitates ice sheet, ice shelf and glacier research by providing a globally comprehensive and temporally dense multi-sensor record of land ice velocity and elevation with low latency.

Velocity granules

All image pairs are processed with the JPL autonomous Repeat Image Feature Tracking algorithm (auto-RIFT), first presented in Gardner et al. (2018). Release v00.0 of the ITS_LIVE velocity mosaics uses auto-RIFT version 1. This ITS_LIVE data set uses surface displacements derived from two kinds of image pairs: pairs acquired in repeat orbits, and pairs drawn from the overlap areas of adjacent or near-adjacent orbits.

Image pairs acquired from the same satellite position ("same-path-row") are searched if they are separated by fewer than 546 days. This approach was used for all satellites in the Landsat series (L4 to L8). Before the launch of Landsat 8, additional pairs are processed to increase data density. These are images acquired from different satellite positions, generally in adjacent or near-adjacent orbit swaths ("cross-path-row"). They are processed if they are separated by 10 to 96 days and were acquired before 14 June 2013, when regular Landsat 8 data begin. Feature tracking of cross-path-row pairs produces velocity fields with a lower signal-to-noise ratio because of residual parallax from imperfect terrain correction.

Preprocessed same-path-row and cross-path-row image pairs are searched for matching features by finding local maxima of the normalized cross-correlation (NCC) at sub-pixel resolution. To reach sub-pixel resolution, the correlation surface is oversampled by a factor of 16 with a Gaussian kernel. A sparse-grid, integer-pixel NCC search (1/16 of the density of the full search grid) is used to determine areas of coherent correlation between image pairs. For more information, see the Normalized Displacement Coherence (NDC) filter described in Gardner et al. (2018).
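The pair-selection rules above can be written as a small predicate. This is an illustrative sketch, not ITS_LIVE's processing code. The function name `is_candidate_pair` is hypothetical, and path/row is represented as a `(path, row)` tuple. It also assumes that the 10 to 96 day window is inclusive and that the 14 June 2013 cut-off applies to the later of the two acquisitions.

```python
from datetime import date

# Cut-off quoted in the text: beginning of regular Landsat 8 data.
LANDSAT8_START = date(2013, 6, 14)


def is_candidate_pair(path_row_1, date_1, path_row_2, date_2):
    """Hypothetical predicate for the same-/cross-path-row pairing rules.

    Assumptions: the 10-96 day window is inclusive, and the cut-off date
    is compared against the later acquisition of the pair.
    """
    separation = abs((date_2 - date_1).days)
    if path_row_1 == path_row_2:
        # Same-path-row: any separation shorter than 546 days.
        return separation < 546
    # Cross-path-row: 10 to 96 days apart, and only before Landsat 8 data.
    return 10 <= separation <= 96 and max(date_1, date_2) < LANDSAT8_START
```

Consider the granule examined later in this notebook. It pairs two Landsat 7 images: path/row 001/113 on 2012-11-18 and path/row 232/113 on 2012-11-04. That makes it a cross-path-row pair 14 days apart, acquired before the cut-off, so the predicate accepts it.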

Coverage

Scene-pair velocities generated from satellite optical and radar imagery.

Coverage: All land ice

Date range: 1985-present

Resolution: 240 m

Scene-pair separation: 6 to 546 days

Search API

The itslive-search API hosted at NSIDC lets us find granules of interest. Because of its high temporal and spatial resolution, the ITS_LIVE velocity data set comprises more than 8 million NetCDF files stored on AWS S3.

Note: This API returns a list of URLs of the granules on AWS S3 that match our search criteria, not the data itself.

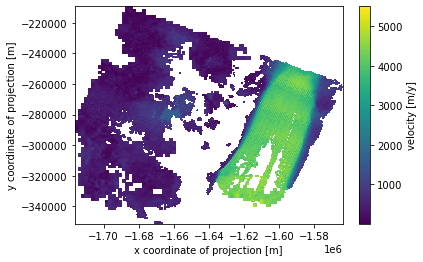

The main parameters are the area and time of interest, plus dataset-specific ones such as the percentage of valid data in each image pair. To see how the spatial matching works, take a look at the following figure.

Spatial matching

ITS_LIVE data come from multiple scenes and satellites, so there is a lot of overlap between granules. All the scenes that match our spatial criteria are returned.

For practical reasons, it’s better to search at the scale of a single glacier rather than over larger areas. This notebook uses Pine Island Glacier as a demo.

Search parameters

polygon/bbox: longitude,latitude pairs, separated by commas

start: start date (YYYY-mm-dd)

end: end date (YYYY-mm-dd)

min_separation: minimum separation between the two images of a pair, in days

max_separation: maximum separation between the two images of a pair, in days

percent_valid_pixels: minimum percentage of valid glacier coverage

serialization: response format (json, html or text)
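Outside the widget, a search is an HTTP GET request with these parameters encoded in the query string. The sketch below assembles such a URL with the standard library. `build_search_url` is a hypothetical helper, and the endpoint is the one used in the curl example in this notebook. The helper covers only the four parameters that example uses. The widget's own query also sends `min_interval` and `max_interval`.

```python
from urllib.parse import urlencode

# Endpoint taken from the curl example in this notebook.
BASE_URL = "https://nsidc.org/apps/itslive-search/velocities/urls/"


def build_search_url(polygon, start, end, percent_valid_pixels):
    """Hypothetical helper: build an itslive-search query URL.

    polygon is a list of (lon, lat) tuples; repeat the first point at the
    end to close the ring, as the examples in this notebook do.
    """
    query = {
        "polygon": ",".join(f"{lon},{lat}" for lon, lat in polygon),
        "start": start,
        "end": end,
        "percent_valid_pixels": percent_valid_pixels,
    }
    # safe="," keeps the commas literal instead of encoding them as %2C;
    # both forms appear in this notebook.
    return BASE_URL + "?" + urlencode(query, safe=",")
```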

itslive-search via curl

We can also query the itslive-search API directly with curl, e.g.:

curl -X GET "https://nsidc.org/apps/itslive-search/velocities/urls/?polygon=-68.0712890625%2C-69.77135571628376%2C-65.19287109375%2C-69.77135571628376%2C-65.19287109375%2C-68.19605229820671%2C-68.0712890625%2C-68.19605229820671%2C-68.0712890625%2C-69.77135571628376&start=2000-01-01&end=2020-01-01&percent_valid_pixels=80" -H "accept: application/json"

If you want to query the API from your own software, the OpenAPI documentation is available at https://nsidc.org/apps/itslive-search/docs

For questions about this notebook or the dataset, please contact NSIDC User Services at nsidc@nsidc.org

from SearchWidget import map

# 'horizontal' renders the widget inline in the notebook; 'vertical' renders it in a sidecar

m = map(orientation='horizontal')

m.display()

Workflow

If you use the widget to download the velocity granules, you can skip ahead to the Data Processing section.

If you prefer to use code, execute the following cells.

# you can also define your own parameters and skip the widget.

# Pine Island Glacier

params = {

'polygon': '-101.1555,-74.7387,-99.1172,-75.0879,-99.8797,-75.46,-102.425,-74.925,-101.1555,-74.7387',

'start': '1984-01-01',

'end': '2020-01-01',

'percent_valid_pixels': 30,

'min_separation': 6,

'max_separation': 120

}

granule_urls = m.Search(params)

print(f'Total granules found: {len(granule_urls)}')

Querying: https://nsidc.org/apps/itslive-search/velocities/urls/?polygon=-101.1555,-74.7387,-99.1172,-75.0879,-99.8797,-75.46,-102.425,-74.925,-101.1555,-74.7387&start=1984-01-01&end=2020-01-01&percent_valid_pixels=30&min_interval=6&max_interval=120

Total granules found: 2246

Filtering

The search returned 2246 granules. That may not sound like much, but downloading all of them is not trivial if you only want a quick look at how a particular glacier has behaved over the years. We can therefore cap the number of granules per year and keep only those whose mid-date falls in selected months. This is useful when the glacier is affected by seasonal cycles.

# filter_urls takes a list of URLs; the filtered result is also stored in the m.filtered_urls attribute

filtered_granules_by_year = m.filter_urls(granule_urls,

max_files_per_year=10,

months=['November', 'December', 'January'],

by_year=True)

# We print the counts per year

for k in filtered_granules_by_year:
    print(k, len(filtered_granules_by_year[k]))

print(f'Total granules after filtering: {len(m.filtered_urls)}')

1996 2

1997 1

2000 3

2001 10

2002 10

2003 7

2005 2

2006 4

2007 10

2008 10

2009 10

2010 10

2011 10

2012 10

2013 10

2014 10

2015 10

2016 10

2017 10

2018 10

2019 10

Total granules after filtering: 169

# This one only caps the number of granules per year

filtered_granules_by_year = m.filter_urls(granule_urls,

max_files_per_year=10,

by_year=True)

# We print the counts per year

for k in filtered_granules_by_year:
    print(k, len(filtered_granules_by_year[k]))

print(f'Total granules after filtering: {len(m.filtered_urls)}')

1996 2

1997 5

2000 4

2001 10

2002 10

2003 10

2004 2

2005 2

2006 4

2007 10

2008 10

2009 10

2010 10

2011 10

2012 10

2013 10

2014 10

2015 10

2016 10

2017 10

2018 10

2019 10

Total granules after filtering: 179
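The year/month filtering can be sketched in plain Python, assuming each granule URL is already paired with its mid-date. `filter_by_year` is a hypothetical stand-in for `m.filter_urls` and differs from it in two ways. First, it takes month numbers instead of month names. Second, it keeps the earliest mid-dates in each year, which may not be the selection rule the widget actually uses.

```python
from collections import defaultdict
from datetime import date


def filter_by_year(granules, max_files_per_year, months=None):
    """Hypothetical filter: group (url, mid_date) pairs by mid-date year,
    optionally keep only the given month numbers, and cap each year.

    Assumption: when a year has too many granules, the earliest are kept.
    """
    by_year = defaultdict(list)
    # Walk granules in chronological order so the cap keeps the earliest ones.
    for url, mid_date in sorted(granules, key=lambda g: g[1]):
        if months is not None and mid_date.month not in months:
            continue
        if len(by_year[mid_date.year]) < max_files_per_year:
            by_year[mid_date.year].append(url)
    return dict(by_year)
```

For example, `filter_by_year(pairs, 10, months={11, 12, 1})` mirrors the November/December/January call shown earlier.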

Downloading data

There are two ways to download data. We can download the filtered URLs (for a single year or for all years), or we can download the whole set of URLs returned by the original search.

Single year example:

files = m.download_velocity_granules(urls=filtered_granules_by_year['2006'],

path_prefix='data/pine-glacier-2006',

params=params)

path_prefix is the directory into which the NetCDF files will be downloaded. params keeps track of which search parameters were used to obtain that set of files.

We can also download the whole series:

files = m.download_velocity_granules(urls=m.filtered_urls,

path_prefix='data/pine-glacier-1996-2019',

params=params)

filtered_urls = m.filtered_urls  # or granule_urls for the unfiltered search results

project_folder = 'data/pine-1996-2019'

# If we are using our own parameters (not the widget), we pass our own dict, e.g. params=my_params

files = m.download_velocity_granules(urls=filtered_urls,

path_prefix=project_folder,

params=params)
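A download step like `m.download_velocity_granules` could look roughly like the sketch below. `download_granules` and the `search_params.json` file name are hypothetical. The `fetch` argument defaults to `urllib.request.urlretrieve` and can be swapped out, for example in tests. Files that are already present are skipped, so an interrupted download can be resumed.

```python
import json
import os
import urllib.request


def download_granules(urls, path_prefix, params=None,
                      fetch=urllib.request.urlretrieve):
    """Hypothetical downloader: save each URL under path_prefix.

    fetch(url, filename) performs the transfer; existing files are skipped.
    """
    os.makedirs(path_prefix, exist_ok=True)
    if params is not None:
        # Record the search parameters next to the data (file name is our choice).
        with open(os.path.join(path_prefix, "search_params.json"), "w") as f:
            json.dump(params, f, indent=2)
    paths = []
    for url in urls:
        # Use the last component of the URL as the local file name.
        filename = os.path.join(path_prefix, url.rstrip("/").split("/")[-1])
        if not os.path.exists(filename):
            fetch(url, filename)
        paths.append(filename)
    return paths
```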

Dataset structure

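Each velocity granule is a NetCDF file holding the velocity field derived from a single image pair. It contains the velocity components vx and vy and the speed v (all in m/y), plus the chip sizes used for feature tracking (chip_size_width, chip_size_height) and an interpolation mask (interp_mask). Two further variables carry metadata: img_pair_info describes the two source images, and Polar_Stereographic describes the grid mapping. The example granule below pairs two Landsat 7 images acquired 14 days apart.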

LE07_L1GT_001113_20121118_20161127_01_T2_X_LE07_L1GT_232113_20121104_20161127_01_T2_G0240V01_P059.nc
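The file name itself encodes much of the pair metadata. The parts before and after `_X_` are Landsat Collection 1 product IDs, which give the sensor, processing level, path/row, acquisition date, processing date, collection number and category. The meaning of the trailing `G0240V01_P059` fields is inferred from this one granule, whose metadata reports a 240 m grid and a `roi_valid_percentage` of 59, so treat it as an assumption. `parse_granule_name` is a hypothetical helper. It computes the mid-date as the earlier acquisition plus half the separation, rounded down to whole days. For this granule that gives 2012-11-11, which matches its `date_center` attribute (20121111).

```python
from datetime import datetime, timedelta


def _parse_product_id(product_id):
    # Landsat Collection 1 product ID: LXSS_LLLL_PPPRRR_YYYYMMDD_yyyymmdd_CC_TX
    sensor, level, path_row, acquired, _processed, _coll, _cat = product_id.split("_")
    return {
        "sensor": sensor,
        "level": level,
        "path": int(path_row[:3]),
        "row": int(path_row[3:]),
        "acquired": datetime.strptime(acquired, "%Y%m%d").date(),
    }


def parse_granule_name(name):
    """Hypothetical parser for names like <ID 1>_X_<ID 2>_G0240V01_P059.nc."""
    stem = name[:-3] if name.endswith(".nc") else name
    first, rest = stem.split("_X_")
    *second, grid_version, valid = rest.split("_")
    img1 = _parse_product_id(first)
    img2 = _parse_product_id("_".join(second))
    d1, d2 = sorted([img1["acquired"], img2["acquired"]])
    dt = (d2 - d1).days
    grid, version = grid_version[1:].split("V")  # "G0240V01" -> "0240", "01"
    return {
        "img1": img1,
        "img2": img2,
        "date_dt": dt,
        # Mid-date: earlier acquisition plus half the separation (whole days).
        "date_center": d1 + timedelta(days=dt // 2),
        "grid_spacing_m": int(grid),       # assumed meaning: grid spacing in metres
        "version": version,
        "valid_percent": int(valid[1:]),   # assumed meaning: "P059" -> 59 % valid
    }
```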

import xarray as xr

velocity_granule = xr.open_dataset('data/LE07_L1GT_001113_20121118_20161127_01_T2_X_LE07_L1GT_232113_20121104_20161127_01_T2_G0240V01_P059.nc')

velocity_granule

<xarray.Dataset>

Dimensions: (x: 636, y: 592)

Coordinates:

* x (x) float64 -1.716e+06 -1.716e+06 ... -1.564e+06

* y (y) float64 -2.094e+05 -2.096e+05 ... -3.512e+05

Data variables:

vx (y, x) float32 ...

vy (y, x) float32 ...

v (y, x) float32 ...

chip_size_width (y, x) float32 ...

chip_size_height (y, x) float32 ...

interp_mask (y, x) uint8 ...

img_pair_info |S1 b''

Polar_Stereographic |S1 b''

Attributes:

GDAL_AREA_OR_POINT: Area

Conventions: CF-1.6

date_created: 31-Mar-2020 01:45:13

title: autoRIFT surface velocities

author: Alex S. Gardner, JPL/NASA

institution: NASA Jet Propulsion Laboratory (JPL), Californi...

scene_pair_type: optical

motion_detection_method: feature

motion_coordinates: map

- x: 636

- y: 592

- x(x)float64-1.716e+06 ... -1.564e+06

- units :

- m

- standard_name :

- projection_x_coordinate

- long_name :

- x coordinate of projection

array([-1716367.5, -1716127.5, -1715887.5, ..., -1564447.5, -1564207.5, -1563967.5]) - y(y)float64-2.094e+05 ... -3.512e+05

- units :

- m

- standard_name :

- projection_y_coordinate

- long_name :

- y coordinate of projection

array([-209392.5, -209632.5, -209872.5, ..., -350752.5, -350992.5, -351232.5])

- vx(y, x)float32...

- units :

- m/y

- standard_name :

- x_velocity

- stable_count :

- 31403.0

- stable_shift :

- 2.0

- stable_shift_applied :

- 2.0

- stable_rmse :

- 186.74

- stable_apply_date :

- 737881.0730667774

- map_scale_corrected :

- 1

- grid_mapping :

- Polar_Stereographic

[376512 values with dtype=float32]

- vy(y, x)float32...

- units :

- m/y

- standard_name :

- y_velocity

- stable_count :

- 31403.0

- stable_shift :

- 2.0

- stable_shift_applied :

- 2.0

- stable_rmse :

- 228.24

- stable_apply_date :

- 737881.0730667933

- map_scale_corrected :

- 1

- grid_mapping :

- Polar_Stereographic

[376512 values with dtype=float32]

- v(y, x)float32...

- units :

- m/y

- standard_name :

- velocity

- map_scale_corrected :

- 1

- best_practice :

- velocities should always be merged/averaged using component velocities to prevent high velocity magnitude bias

- grid_mapping :

- Polar_Stereographic

[376512 values with dtype=float32]

- chip_size_width(y, x)float32...

- units :

- m

- chip_size_coordinates :

- image projection geometry: width = x, height = y

- standard_name :

- chip_size_width

- grid_mapping :

- Polar_Stereographic

[376512 values with dtype=float32]

- chip_size_height(y, x)float32...

- units :

- m

- chip_size_coordinates :

- image projection geometry: width = x, height = y

- standard_name :

- chip_size_height

- grid_mapping :

- Polar_Stereographic

[376512 values with dtype=float32]

- interp_mask(y, x)uint8...

- units :

- binary

- standard_name :

- interpolated_value_mask

- grid_mapping :

- Polar_Stereographic

[376512 values with dtype=uint8]

- img_pair_info()|S1...

- mission_img1 :

- L

- sensor_img1 :

- E

- satellite_img1 :

- 7.0

- correction_level_img1 :

- L1GT

- path_img1 :

- 1.0

- row_img1 :

- 113.0

- aquisition_date_img1 :

- 20121118

- processing_date_img1 :

- 20161127

- collection_number_img1 :

- 1.0

- collection_catagory_img1 :

- T2

- mission_img2 :

- L

- sensor_img2 :

- E

- satellite_img2 :

- 7.0

- correction_level_img2 :

- L1GT

- path_img2 :

- 232.0

- row_img2 :

- 113.0

- aquisition_date_img2 :

- 20121104

- processing_date_img2 :

- 20161127

- collection_number_img2 :

- 1.0

- collection_catagory_img2 :

- T2

- date_dt :

- 14.0

- date_center :

- 20121111

- roi_valid_percentage :

- 59.0

- autoRIFT_software_version :

- 1.0

array(b'', dtype='|S1')

- Polar_Stereographic()|S1...

- grid_mapping_name :

- polar_stereographic

- straight_vertical_longitude_from_pole :

- 0.0

- latitude_of_projection_origin :

- -90.0

- latitude_of_origin :

- -71.0

- scale_factor_at_projection_origin :

- 1.0

- false_easting :

- 0.0

- false_northing :

- 0.0

- semi_major_axis :

- 6378.137

- semi_minor_axis :

- 6356.752

- inverse_flattening :

- 298.257223563

- GeoTransform :

- -1716487.5 240.0 0 -209272.5 0 -240.0

- spatial_ref :

- PROJCS["WGS 84 / Antarctic Polar Stereographic",GEOGCS["WGS 84",DATUM["WGS_1984",SPHEROID["WGS 84",6378137,298.257223563,AUTHORITY["EPSG","7030"]],AUTHORITY["EPSG","6326"]],PRIMEM["Greenwich",0,AUTHORITY["EPSG","8901"]],UNIT["degree",0.0174532925199433,AUTHORITY["EPSG","9122"]],AUTHORITY["EPSG","4326"]],PROJECTION["Polar_Stereographic"],PARAMETER["latitude_of_origin",-71],PARAMETER["central_meridian",0],PARAMETER["scale_factor",1],PARAMETER["false_easting",0],PARAMETER["false_northing",0],UNIT["metre",1,AUTHORITY["EPSG","9001"]],AXIS["Easting",EAST],AXIS["Northing",NORTH],AUTHORITY["EPSG","3031"]]

- spatial_proj4 :

- +proj=stere +lat_0=-90 +lat_ts=-71 +lon_0=0 +k=1 +x_0=0 +y_0=0 +datum=WGS84 +units=m +no_defs

- spatial_epsg :

- 3031.0

array(b'', dtype='|S1')

- GDAL_AREA_OR_POINT :

- Area

- Conventions :

- CF-1.6

- date_created :

- 31-Mar-2020 01:45:13

- title :

- autoRIFT surface velocities

- author :

- Alex S. Gardner, JPL/NASA

- institution :

- NASA Jet Propulsion Laboratory (JPL), California Institute of Technology

- scene_pair_type :

- optical

- motion_detection_method :

- feature

- motion_coordinates :

- map
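The `GeoTransform` attribute of `Polar_Stereographic` follows GDAL's convention. Its six values are the x of the upper-left corner, the pixel width, the row rotation, the y of the upper-left corner, the column rotation and the pixel height. The transform gives the outer corner of the grid, while the `x`/`y` coordinates are pixel centres, half a pixel in. The sketch below uses a hypothetical `pixel_centers` helper and assumes zero rotation terms. It reproduces the first and last `x` and `y` values listed above from the transform.

```python
def pixel_centers(origin, spacing, n):
    """Hypothetical helper: centres of n pixels along one GeoTransform axis."""
    return [origin + spacing * (i + 0.5) for i in range(n)]


# GeoTransform of this granule: x0, dx, row rotation, y0, column rotation, dy
gt = (-1716487.5, 240.0, 0.0, -209272.5, 0.0, -240.0)
x = pixel_centers(gt[0], gt[1], 636)   # 636 columns
y = pixel_centers(gt[3], gt[5], 592)   # 592 rows; dy < 0, so y decreases
print(x[0], x[-1], y[0], y[-1])
```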

velocity_granule.v.plot(x='x', y='y')

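The `v` variable carries a `best_practice` note: velocities should be merged or averaged using the component velocities. A small numerical example shows why. The two estimates below are made-up values for a single pixel. They agree along flow but have opposite cross-flow noise. Averaging the speeds turns that noise into a positive bias, while averaging `vx` and `vy` first lets it cancel.

```python
import numpy as np

# Made-up velocity estimates (m/y) for one pixel from two image pairs.
vx = np.array([100.0, 100.0])
vy = np.array([10.0, -10.0])

# Averaging magnitudes: the cross-flow noise inflates every speed.
mean_of_speeds = np.hypot(vx, vy).mean()
# Averaging components first: the noise cancels before taking the magnitude.
speed_of_mean = np.hypot(vx.mean(), vy.mean())

print(mean_of_speeds, speed_of_mean)
```

On the cube built in the Data Processing section, the same idea would be `np.hypot(cube.vx.mean('time'), cube.vy.mean('time'))` rather than `cube.v.mean('time')`.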

Data Processing

The most common use case for the velocity granules is building a time series. To create one, we need to concatenate multiple granules that cover the region of interest over the period we are interested in. ITS_LIVE provides a processing module that loads all the velocity granules in a given directory and clips them to a user-defined GeoJSON geometry. Only the data inside the geometry is then used to build the cube.

IMPORTANT:

load_cube is not a lazy function: it loads every granule into memory to build the cube. If we try to load 100,000 granules, the kernel will most likely run out of memory.
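To see why, it helps to estimate the size of a dense cube: time steps × rows × columns × bytes per value, summed over the variables. `cube_nbytes` is a hypothetical helper that ignores coordinates and attributes. The dimensions and dtypes below are those of the Pine Island cube built later in this notebook: 200 time steps, 400 × 298 pixels, five float32 variables and a float64 `interp_mask`.

```python
import numpy as np


def cube_nbytes(n_time, ny, nx, dtypes):
    """Hypothetical helper: approximate bytes for dense (time, y, x) variables."""
    cells = n_time * ny * nx
    return sum(cells * np.dtype(dt).itemsize for dt in dtypes)


# vx, vy, v, chip_size_width, chip_size_height: float32; interp_mask: float64
dtypes = ["float32"] * 5 + ["float64"]
print(cube_nbytes(200, 400, 298, dtypes) / 1e6, "MB")
```

That is about 668 MB for a small, clipped region, and the total grows linearly with the number of time steps.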

Note: If we don’t use the map widget, we can use a helper function to get the GeoJSON polygon from a bounding box.

The signature of the load_cube method is load_cube(project_folder, clip_geom=geometry, include_all_projections=False)

The parameters are:

project_folder: file path pattern matching the NetCDF velocity files

clip_geom: GeoJSON geometry dictionary used to clip the files

include_all_projections: True or False. If True, the cube also includes granules in UTM zones other than the most common one for the selected area.

Note: The following cells are intended to work after multiple velocity granules from different years and the same region are downloaded.

from VelocityProcessing import VelocityProcessing as vp

# If we have a bounding box or a list of coordinates, we can get the corresponding GeoJSON with:

# geometry = vp.box_to_geojson([-49.79, 69.06, -48.55, 69.25])

# geometry = vp.polygon_to_geojson([(-48.55, 69.06),(-48.55, 69.25),(-49.79, 69.25),(-49.79, 69.06),(-48.55, 69.06)])

# The load_cube method needs a GeoJSON geometry to clip the region of interest.

geometry = {'type': 'Polygon',

'coordinates': [[[-101.155511, -74.738709],

[-99.117207, -75.087959],

[-99.879774, -75.46007],

[-102.42516, -74.925034],

[-101.155511, -74.738709]]]}

# if we are using the widget

# geometry = m.get_current_selection()['geometry']

geometry

{'type': 'Polygon',

'coordinates': [[[-101.155511, -74.738709],

[-99.117207, -75.087959],

[-99.879774, -75.46007],

[-102.42516, -74.925034],

[-101.155511, -74.738709]]]}
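A helper like `vp.box_to_geojson` is easy to approximate. The sketch below is a hypothetical stand-in (`bbox_to_polygon`), not the module's implementation. It assumes the box is given as `[min_lon, min_lat, max_lon, max_lat]`, as in the commented example. It orders the corners like the commented `polygon_to_geojson` call. That order is counterclockwise, which is the orientation RFC 7946 specifies for exterior rings.

```python
def bbox_to_polygon(bbox):
    """Hypothetical helper: GeoJSON Polygon for [min_lon, min_lat, max_lon, max_lat]."""
    min_lon, min_lat, max_lon, max_lat = bbox
    ring = [
        [max_lon, min_lat],
        [max_lon, max_lat],
        [min_lon, max_lat],
        [min_lon, min_lat],
        [max_lon, min_lat],  # a GeoJSON ring ends where it starts
    ]
    return {"type": "Polygon", "coordinates": [ring]}
```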

%%time

# File pattern for our current search folder.

project_folder = 'data/pine-1996-2019/*.nc'

cube = vp.load_cube(project_folder,

clip_geom=geometry,

include_all_projections=True)

cube

CPU times: user 41.6 s, sys: 889 ms, total: 42.5 s

Wall time: 42.5 s

<xarray.Dataset>

Dimensions: (time: 200, x: 298, y: 400)

Coordinates:

* x (x) float64 -1.636e+06 -1.636e+06 ... -1.565e+06

* y (y) float64 -3.541e+05 -3.539e+05 ... -2.584e+05

* time (time) datetime64[ns] 1996-12-19 ... 2017-01-05

Polar_Stereographic int64 0

Data variables:

vx (time, y, x) float32 nan nan nan nan ... nan nan nan

vy (time, y, x) float32 nan nan nan nan ... nan nan nan

v (time, y, x) float32 nan nan nan nan ... nan nan nan

chip_size_width (time, y, x) float32 nan nan nan nan ... nan nan nan

chip_size_height (time, y, x) float32 nan nan nan nan ... nan nan nan

interp_mask (time, y, x) float64 nan nan nan nan ... 0.0 0.0 0.0

Attributes:

GDAL_AREA_OR_POINT: Area

Conventions: CF-1.6

date_created: 31-Mar-2020 05:44:18

title: autoRIFT surface velocities

author: Alex S. Gardner, JPL/NASA

institution: NASA Jet Propulsion Laboratory (JPL), Californi...

scene_pair_type: optical

motion_detection_method: feature

motion_coordinates: map

grid_mapping: Polar_Stereographic

- time: 200

- x: 298

- y: 400

- x(x)float64-1.636e+06 ... -1.565e+06

- axis :

- X

- long_name :

- x coordinate of projection

- standard_name :

- projection_x_coordinate

- units :

- metre

array([-1635967.5, -1635727.5, -1635487.5, ..., -1565167.5, -1564927.5, -1564687.5]) - y(y)float64-3.541e+05 ... -2.584e+05

- axis :

- Y

- long_name :

- y coordinate of projection

- standard_name :

- projection_y_coordinate

- units :

- metre

array([-354112.5, -353872.5, -353632.5, ..., -258832.5, -258592.5, -258352.5])

- time(time)datetime64[ns]1996-12-19 ... 2017-01-05

array(['1996-12-19T00:00:00.000000000', '1997-01-29T00:00:00.000000000', '1997-02-11T00:00:00.000000000', '2000-02-02T00:00:00.000000000', '2000-12-21T00:00:00.000000000', '2001-01-09T00:00:00.000000000', '2001-11-24T00:00:00.000000000', '2001-12-02T00:00:00.000000000', '2001-12-08T00:00:00.000000000', '2001-12-09T00:00:00.000000000', '2002-12-05T00:00:00.000000000', '2002-12-19T00:00:00.000000000', '2002-12-21T00:00:00.000000000', '2002-12-23T00:00:00.000000000', '2002-12-31T00:00:00.000000000', '2003-01-06T00:00:00.000000000', '2003-01-14T00:00:00.000000000', '2003-01-22T00:00:00.000000000', '2003-01-29T00:00:00.000000000', '2003-01-31T00:00:00.000000000', '2003-02-04T00:00:00.000000000', '2003-02-07T00:00:00.000000000', '2003-02-09T00:00:00.000000000', '2003-02-20T00:00:00.000000000', '2004-02-09T00:00:00.000000000', '2005-01-27T00:00:00.000000000', '2006-12-22T00:00:00.000000000', '2006-12-24T00:00:00.000000000', '2006-12-30T00:00:00.000000000', '2007-11-14T00:00:00.000000000', '2007-11-28T00:00:00.000000000', '2007-11-30T00:00:00.000000000', '2007-12-18T00:00:00.000000000', '2008-01-26T00:00:00.000000000', '2008-12-02T00:00:00.000000000', '2008-12-06T00:00:00.000000000', '2008-12-11T00:00:00.000000000', '2008-12-19T00:00:00.000000000', '2008-12-21T00:00:00.000000000', '2008-12-22T00:00:00.000000000', '2008-12-26T00:00:00.000000000', '2008-12-30T00:00:00.000000000', '2009-01-04T00:00:00.000000000', '2009-01-21T00:00:00.000000000', '2009-02-05T00:00:00.000000000', '2009-02-07T00:00:00.000000000', '2009-03-01T00:00:00.000000000', '2009-11-20T00:00:00.000000000', '2009-11-27T00:00:00.000000000', '2009-11-28T00:00:00.000000000', '2009-12-14T00:00:00.000000000', '2010-01-08T00:00:00.000000000', '2010-01-15T00:00:00.000000000', '2010-01-24T00:00:00.000000000', '2010-12-10T00:00:00.000000000', '2010-12-13T00:00:00.000000000', '2010-12-20T00:00:00.000000000', '2010-12-26T00:00:00.000000000', '2011-01-04T00:00:00.000000000', '2011-01-11T00:00:00.000000000', 
'2011-01-14T00:00:00.000000000', '2011-01-20T00:00:00.000000000', '2011-01-25T00:00:00.000000000', '2011-01-27T00:00:00.000000000', '2011-02-04T00:00:00.000000000', '2012-11-29T00:00:00.000000000', '2012-12-11T00:00:00.000000000', '2012-12-12T00:00:00.000000000', '2012-12-15T00:00:00.000000000', '2012-12-22T00:00:00.000000000', '2012-12-24T00:00:00.000000000', '2012-12-27T00:00:00.000000000', '2012-12-31T00:00:00.000000000', '2013-01-20T00:00:00.000000000', '2013-12-19T00:00:00.000000000', '2013-12-20T00:00:00.000000000', '2013-12-24T00:00:00.000000000', '2013-12-27T00:00:00.000000000', '2013-12-28T00:00:00.000000000', '2014-10-27T00:00:00.000000000', '2014-11-12T00:00:00.000000000', '2014-11-28T00:00:00.000000000', '2014-11-29T00:00:00.000000000', '2014-12-14T00:00:00.000000000', '2014-12-15T00:00:00.000000000', '2014-12-17T00:00:00.000000000', '2014-12-23T00:00:00.000000000', '2014-12-31T00:00:00.000000000', '2015-01-05T00:00:00.000000000', '2015-01-16T00:00:00.000000000', '2015-01-21T00:00:00.000000000', '2015-01-24T00:00:00.000000000', '2015-02-01T00:00:00.000000000', '2015-02-09T00:00:00.000000000', '2015-02-25T00:00:00.000000000', '2015-11-08T00:00:00.000000000', '2015-12-08T00:00:00.000000000', '2016-01-18T00:00:00.000000000', '2016-12-12T00:00:00.000000000', '2017-01-05T00:00:00.000000000', '1996-12-19T00:00:00.000000000', '1997-01-29T00:00:00.000000000', '1997-02-11T00:00:00.000000000', '2000-02-02T00:00:00.000000000', '2000-12-21T00:00:00.000000000', '2001-01-09T00:00:00.000000000', '2001-11-24T00:00:00.000000000', '2001-12-02T00:00:00.000000000', '2001-12-08T00:00:00.000000000', '2001-12-09T00:00:00.000000000', '2002-12-05T00:00:00.000000000', '2002-12-19T00:00:00.000000000', '2002-12-21T00:00:00.000000000', '2002-12-23T00:00:00.000000000', '2002-12-31T00:00:00.000000000', '2003-01-06T00:00:00.000000000', '2003-01-14T00:00:00.000000000', '2003-01-22T00:00:00.000000000', '2003-01-29T00:00:00.000000000', '2003-01-31T00:00:00.000000000', 
'2003-02-04T00:00:00.000000000', '2003-02-07T00:00:00.000000000', '2003-02-09T00:00:00.000000000', '2003-02-20T00:00:00.000000000', '2004-02-09T00:00:00.000000000', '2005-01-27T00:00:00.000000000', '2006-12-22T00:00:00.000000000', '2006-12-24T00:00:00.000000000', '2006-12-30T00:00:00.000000000', '2007-11-14T00:00:00.000000000', '2007-11-28T00:00:00.000000000', '2007-11-30T00:00:00.000000000', '2007-12-18T00:00:00.000000000', '2008-01-26T00:00:00.000000000', '2008-12-02T00:00:00.000000000', '2008-12-06T00:00:00.000000000', '2008-12-11T00:00:00.000000000', '2008-12-19T00:00:00.000000000', '2008-12-21T00:00:00.000000000', '2008-12-22T00:00:00.000000000', '2008-12-26T00:00:00.000000000', '2008-12-30T00:00:00.000000000', '2009-01-04T00:00:00.000000000', '2009-01-21T00:00:00.000000000', '2009-02-05T00:00:00.000000000', '2009-02-07T00:00:00.000000000', '2009-03-01T00:00:00.000000000', '2009-11-20T00:00:00.000000000', '2009-11-27T00:00:00.000000000', '2009-11-28T00:00:00.000000000', '2009-12-14T00:00:00.000000000', '2010-01-08T00:00:00.000000000', '2010-01-15T00:00:00.000000000', '2010-01-24T00:00:00.000000000', '2010-12-10T00:00:00.000000000', '2010-12-13T00:00:00.000000000', '2010-12-20T00:00:00.000000000', '2010-12-26T00:00:00.000000000', '2011-01-04T00:00:00.000000000', '2011-01-11T00:00:00.000000000', '2011-01-14T00:00:00.000000000', '2011-01-20T00:00:00.000000000', '2011-01-25T00:00:00.000000000', '2011-01-27T00:00:00.000000000', '2011-02-04T00:00:00.000000000', '2012-11-29T00:00:00.000000000', '2012-12-11T00:00:00.000000000', '2012-12-12T00:00:00.000000000', '2012-12-15T00:00:00.000000000', '2012-12-22T00:00:00.000000000', '2012-12-24T00:00:00.000000000', '2012-12-27T00:00:00.000000000', '2012-12-31T00:00:00.000000000', '2013-01-20T00:00:00.000000000', '2013-12-19T00:00:00.000000000', '2013-12-20T00:00:00.000000000', '2013-12-24T00:00:00.000000000', '2013-12-27T00:00:00.000000000', '2013-12-28T00:00:00.000000000', '2014-10-27T00:00:00.000000000', 
'2014-11-12T00:00:00.000000000', '2014-11-28T00:00:00.000000000', '2014-11-29T00:00:00.000000000', '2014-12-14T00:00:00.000000000', '2014-12-15T00:00:00.000000000', '2014-12-17T00:00:00.000000000', '2014-12-23T00:00:00.000000000', '2014-12-31T00:00:00.000000000', '2015-01-05T00:00:00.000000000', '2015-01-16T00:00:00.000000000', '2015-01-21T00:00:00.000000000', '2015-01-24T00:00:00.000000000', '2015-02-01T00:00:00.000000000', '2015-02-09T00:00:00.000000000', '2015-02-25T00:00:00.000000000', '2015-11-08T00:00:00.000000000', '2015-12-08T00:00:00.000000000', '2016-01-18T00:00:00.000000000', '2016-12-12T00:00:00.000000000', '2017-01-05T00:00:00.000000000'], dtype='datetime64[ns]') - Polar_Stereographic()int640

- crs_wkt :

- PROJCS["WGS 84 / Antarctic Polar Stereographic",GEOGCS["WGS 84",DATUM["WGS_1984",SPHEROID["WGS 84",6378137,298.257223563,AUTHORITY["EPSG","7030"]],AUTHORITY["EPSG","6326"]],PRIMEM["Greenwich",0,AUTHORITY["EPSG","8901"]],UNIT["degree",0.0174532925199433,AUTHORITY["EPSG","9122"]],AUTHORITY["EPSG","4326"]],PROJECTION["Polar_Stereographic"],PARAMETER["latitude_of_origin",-71],PARAMETER["central_meridian",0],PARAMETER["false_easting",0],PARAMETER["false_northing",0],UNIT["metre",1,AUTHORITY["EPSG","9001"]],AXIS["Easting",NORTH],AXIS["Northing",NORTH],AUTHORITY["EPSG","3031"]]

- semi_major_axis :

- 6378137.0

- semi_minor_axis :

- 6356752.314245179

- inverse_flattening :

- 298.257223563

- reference_ellipsoid_name :

- WGS 84

- longitude_of_prime_meridian :

- 0.0

- prime_meridian_name :

- Greenwich

- geographic_crs_name :

- WGS 84

- horizontal_datum_name :

- World Geodetic System 1984

- projected_crs_name :

- WGS 84 / Antarctic Polar Stereographic

- grid_mapping_name :

- polar_stereographic

- standard_parallel :

- -71.0

- straight_vertical_longitude_from_pole :

- 0.0

- false_easting :

- 0.0

- false_northing :

- 0.0

- spatial_ref :

- PROJCS["WGS 84 / Antarctic Polar Stereographic",GEOGCS["WGS 84",DATUM["WGS_1984",SPHEROID["WGS 84",6378137,298.257223563,AUTHORITY["EPSG","7030"]],AUTHORITY["EPSG","6326"]],PRIMEM["Greenwich",0,AUTHORITY["EPSG","8901"]],UNIT["degree",0.0174532925199433,AUTHORITY["EPSG","9122"]],AUTHORITY["EPSG","4326"]],PROJECTION["Polar_Stereographic"],PARAMETER["latitude_of_origin",-71],PARAMETER["central_meridian",0],PARAMETER["false_easting",0],PARAMETER["false_northing",0],UNIT["metre",1,AUTHORITY["EPSG","9001"]],AXIS["Easting",NORTH],AXIS["Northing",NORTH],AUTHORITY["EPSG","3031"]]

- GeoTransform :

- -1636087.5 240.0 0.0 -354232.5 0.0 240.0

array(0)

- vx(time, y, x)float32nan nan nan nan ... nan nan nan nan

- units :

- m/y

- standard_name :

- x_velocity

- stable_count :

- 17131.0

- stable_shift :

- 2.0

- stable_shift_applied :

- 2.0

- stable_rmse :

- 11.5

- stable_apply_date :

- 737881.2390999333

- map_scale_corrected :

- 1

- grid_mapping :

- Polar_Stereographic

- _FillValue :

- -9999.0

array([[[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., ... ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]]], dtype=float32) - vy(time, y, x)float32nan nan nan nan ... nan nan nan nan

- units :

- m/y

- standard_name :

- y_velocity

- stable_count :

- 17131.0

- stable_shift :

- 2.0

- stable_shift_applied :

- 2.0

- stable_rmse :

- 28.17

- stable_apply_date :

- 737881.2390999491

- map_scale_corrected :

- 1

- grid_mapping :

- Polar_Stereographic

- _FillValue :

- -9999.0

array([[[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., ... ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]]], dtype=float32) - v(time, y, x)float32nan nan nan nan ... nan nan nan nan

- units :

- m/y

- standard_name :

- velocity

- map_scale_corrected :

- 1

- best_practice :

- velocities should always be merged/averaged using component velocities to prevent high velocity magnitude bias

- grid_mapping :

- Polar_Stereographic

- _FillValue :

- -9999.0

array([[[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., ... ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]], [[nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], ..., [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan], [nan, nan, nan, ..., nan, nan, nan]]], dtype=float32) - chip_size_width(time, y, x)float32nan nan nan nan ... nan nan nan nan

- units :

- m

- chip_size_coordinates :

- image projection geometry: width = x, height = y

- standard_name :

- chip_size_width

- grid_mapping :

- Polar_Stereographic

- _FillValue :

- -9999.0

- chip_size_height (time, y, x) float32
  - units: m
  - chip_size_coordinates: image projection geometry: width = x, height = y
  - standard_name: chip_size_height
  - grid_mapping: Polar_Stereographic
  - _FillValue: -9999.0

- interp_mask (time, y, x) float64
  - units: binary
  - standard_name: interpolated_value_mask
  - grid_mapping: Polar_Stereographic
  - _FillValue: -9999.0

Global attributes:

- GDAL_AREA_OR_POINT: Area
- Conventions: CF-1.6
- date_created: 31-Mar-2020 05:44:18
- title: autoRIFT surface velocities
- author: Alex S. Gardner, JPL/NASA
- institution: NASA Jet Propulsion Laboratory (JPL), California Institute of Technology
- scene_pair_type: optical
- motion_detection_method: feature
- motion_coordinates: map
- grid_mapping: Polar_Stereographic
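Each variable above carries `_FillValue: -9999.0`; CF-aware readers such as xarray (with its default `mask_and_scale=True` decoding) turn those pixels into `NaN`, which is why missing data shows up as `nan` in the loaded cube. `interp_mask` is a binary flag for interpolated values. The plain-Python sketch below shows how both could be applied to a few pixels before analysis. It assumes that an `interp_mask` value of 1 marks an interpolated pixel (the attributes only say `binary` and `interpolated_value_mask`), and `clean_pixels` is a hypothetical helper, not part of ITS_LIVE or xarray.

```python
import math

FILL_VALUE = -9999.0  # _FillValue attribute listed on the cube variables


def clean_pixels(values, interp_mask, drop_interpolated=True):
    """Return a copy of `values` with fill values (and optionally interpolated pixels) set to NaN.

    Assumption: interp_mask == 1 marks an interpolated pixel, 0 a measured one,
    and NaN in the mask means no data.
    """
    cleaned = []
    for value, flag in zip(values, interp_mask):
        if value == FILL_VALUE or math.isnan(value):
            cleaned.append(math.nan)  # decode the CF fill value as missing
        elif drop_interpolated and flag == 1:
            cleaned.append(math.nan)  # drop pixels flagged as interpolated
        else:
            cleaned.append(value)
    return cleaned


# Toy values for illustration only, not read from the cube
v = [1200.0, -9999.0, 850.5, 3100.0]
mask = [0.0, math.nan, 1.0, 0.0]
print(clean_pixels(v, mask))  # [1200.0, nan, nan, 3100.0]
```

On the cube itself, the same masking could be written with `DataArray.where`, e.g. `cube.v.where(cube.interp_mask != 1)`, which keeps values where the condition holds and fills the rest with `NaN`.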

```python
# Histogram of velocity magnitudes over all pixels and time steps, useful for spotting outliers
cube.v.plot.hist()
```

```
(array([5.105220e+05, 2.417960e+05, 2.363840e+05, 5.503860e+05,
        1.184144e+06, 9.083740e+05, 1.170000e+04, 2.360000e+02,
        7.400000e+01, 5.600000e+01]),
 array([   0. ,  789.5, 1579. , 2368.5, 3158. , 3947.5, 4737. , 5526.5,
        6316. , 7105.5, 7895. ], dtype=float32),
 <BarContainer object of 10 artists>)
```

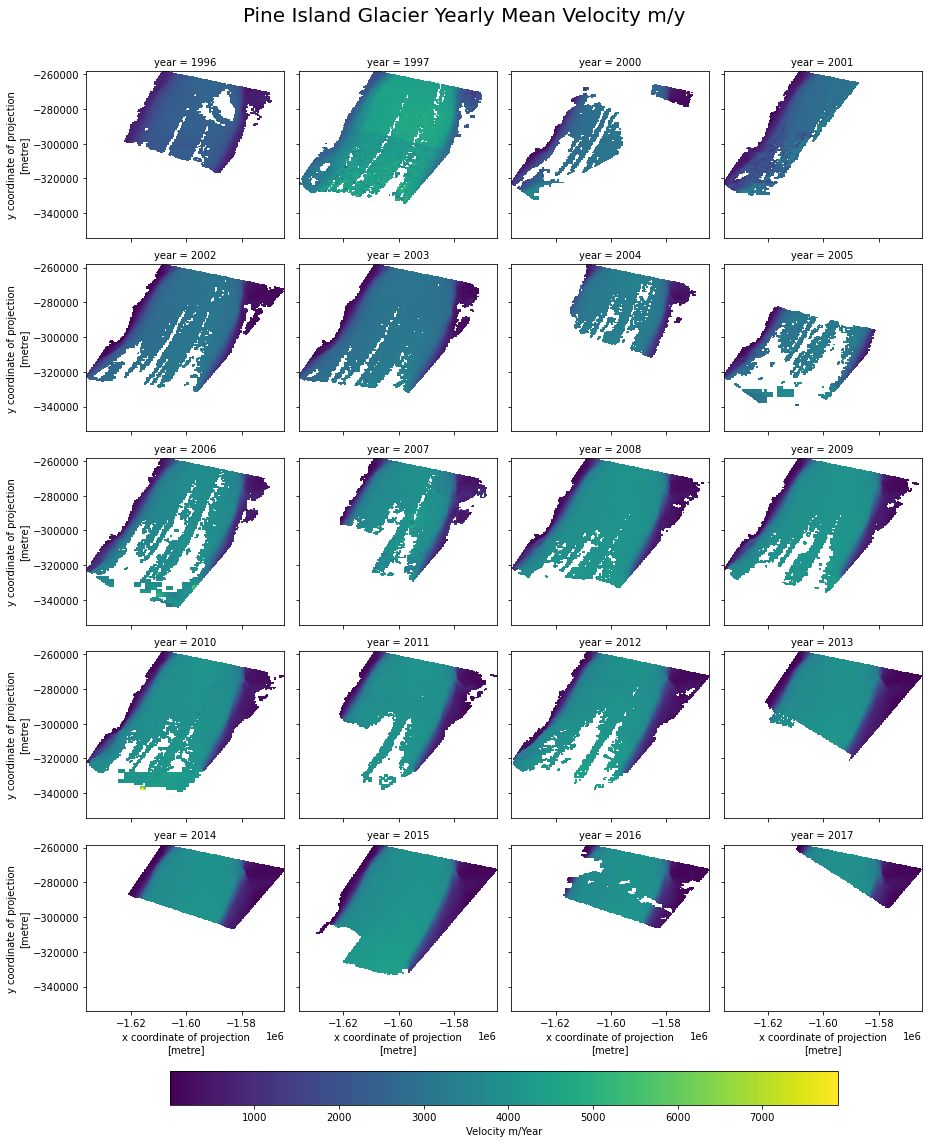
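Almost all pixels in this histogram fall below about 4,737 m/yr, and only a few hundred sit in the top three bins. One simple way to turn that into an outlier threshold is to take the lowest bin edge below which a chosen fraction of all valid pixels falls. The sketch below does this in plain Python with the counts and bin edges printed by `cube.v.plot.hist()`; the 99.9% level and the helper name `upper_cutoff` are illustrative assumptions, not part of the ITS_LIVE workflow.

```python
from itertools import accumulate

# Counts and bin edges copied from the cube.v.plot.hist() output above (10 bins)
counts = [510522, 241796, 236384, 550386, 1184144, 908374, 11700, 236, 74, 56]
edges = [0.0, 789.5, 1579.0, 2368.5, 3158.0, 3947.5, 4737.0, 5526.5, 6316.0, 7105.5, 7895.0]


def upper_cutoff(counts, edges, fraction=0.999):
    """Return the smallest right-hand bin edge with at least `fraction` of all samples below it.

    The result can only be as fine as the bin width, so it is a coarse threshold.
    """
    total = sum(counts)
    for right_edge, cumulative in zip(edges[1:], accumulate(counts)):
        if cumulative / total >= fraction:
            return right_edge
    return edges[-1]


cutoff = upper_cutoff(counts, edges)
print(cutoff)  # 5526.5
```

If `cube.v` fits in memory, a finer threshold could come straight from the data, for example `cutoff = float(cube.v.load().quantile(0.999))` followed by `cube.v.where(cube.v <= cutoff)`.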

```python
# Use xarray operations (mean, max, etc.) to aggregate the cube by year and plot the result over time
cube_yearly = cube.groupby('time.year').mean()

plot = cube_yearly.v.plot(
    x='x',
    y='y',
    col='year',
    col_wrap=4,
    cbar_kwargs=dict(
        orientation='horizontal',
        shrink=0.8,
        anchor=(0.5, -0.8),
        label='Velocity (m/yr)',
    ),
)

plot.fig.subplots_adjust(top=0.92)
# fontweight sets the title weight directly; a weight passed inside fontdict
# can be overridden by the default rcParams['figure.titleweight']
plot.fig.suptitle("Pine Island Glacier Yearly Mean Velocity (m/yr)",
                  fontsize=20, fontweight="bold")
```
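`cube.groupby('time.year').mean()` collects all scene-pair layers whose `time` falls in the same calendar year and averages them pixel by pixel; for float data xarray skips `NaN` by default, so each yearly mean uses only the pairs that actually observed that pixel. The plain-Python sketch below mimics that reduction for a single pixel. The dates and speeds are made up, and `yearly_nanmean` is a hypothetical helper.

```python
import math
from collections import defaultdict
from datetime import date


def yearly_nanmean(times, values):
    """Average `values` by calendar year of `times`, ignoring NaN (like skipna=True)."""
    groups = defaultdict(list)
    for t, v in zip(times, values):
        groups[t.year].append(v)
    result = {}
    for year in sorted(groups):
        valid = [v for v in groups[year] if not math.isnan(v)]
        # A year with no valid observation stays NaN
        result[year] = sum(valid) / len(valid) if valid else math.nan
    return result


# Hypothetical time series for one pixel (m/yr)
times = [date(2014, 1, 10), date(2014, 6, 3), date(2015, 2, 1), date(2016, 8, 20)]
speeds = [3500.0, 3700.0, math.nan, 3900.0]
print(yearly_nanmean(times, speeds))  # {2014: 3600.0, 2015: nan, 2016: 3900.0}
```

Because each panel in the faceted plot is one such yearly mean, counting the valid observations per year (for example with `cube.v.groupby('time.year').count()`) can help judge how representative each panel is.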